Running DeepSeek-R1 on a refurbished blade server: 01 Introduction

"Intro"

So I started to look into ways to self-host LLMs, including bigger ones like R1.

Let's start by setting some targets for this project.

- Speed > 0.5 t/s (while this is considered slow-ish, my AI workflow is usually: write prompt -> forget about it for half an hour -> check)

- Doesn't cost my yearly salary

- 512 GB of RAM/VRAM

Extended goals/nice to haves:

- 1024 GB (1 TB) of RAM/VRAM

- Running the server or farm doesn't cost more per month than a ChatGPT subscription ($20)

- Small (at least it shouldn't occupy a 4U server rack)
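To sanity-check the 512 GB and 1 TB targets, here is a rough sketch of how much memory the weights alone need at different precisions. It assumes DeepSeek-R1's published total of 671B parameters. The bits-per-weight levels are illustrative round numbers rather than specific quantization formats, and KV cache and runtime overhead are ignored.

```python
# Rough weight-only memory estimate; ignores KV cache and runtime overhead.
TOTAL_PARAMS = 671e9  # assumed: DeepSeek-R1 total parameter count


def weights_gb(bits_per_weight: float, params: float = TOTAL_PARAMS) -> float:
    """Return the size of the weights in GB (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


# Illustrative precision levels, not specific quantization formats.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weights_gb(bits):.0f} GB")
```

Under these assumptions, 8-bit weights come to about 671 GB, so 512 GB only works with something close to 4-bit quantization, while 1 TB leaves room for 8-bit weights plus overhead.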

"Let's see our options"

I decided to rate the options in 7 categories:

- Cost

- Total memory

- Speed

- Power required

- Bulkiness

- Looks

- Partner approval factor

GPU-only server

External link to nvidianews.nvidia.com

Using something like an Nvidia HGX A100 (8x 80 GB of VRAM)

Cost: Arm, leg, house, and everything you probably own x2

Total memory: 1 TB+ fits on two of those (2x 640 GB)

Speed: 5/5 (Parallel inference possible)

Power consumption: 3/5 (4-5 kW)

Bulkiness: 4/5 (2-4u server equivalent)

Looks: 4/5 (Nvidia servers often look cool)

Partner approval factor: 0/5 ("No, we are not selling the house")

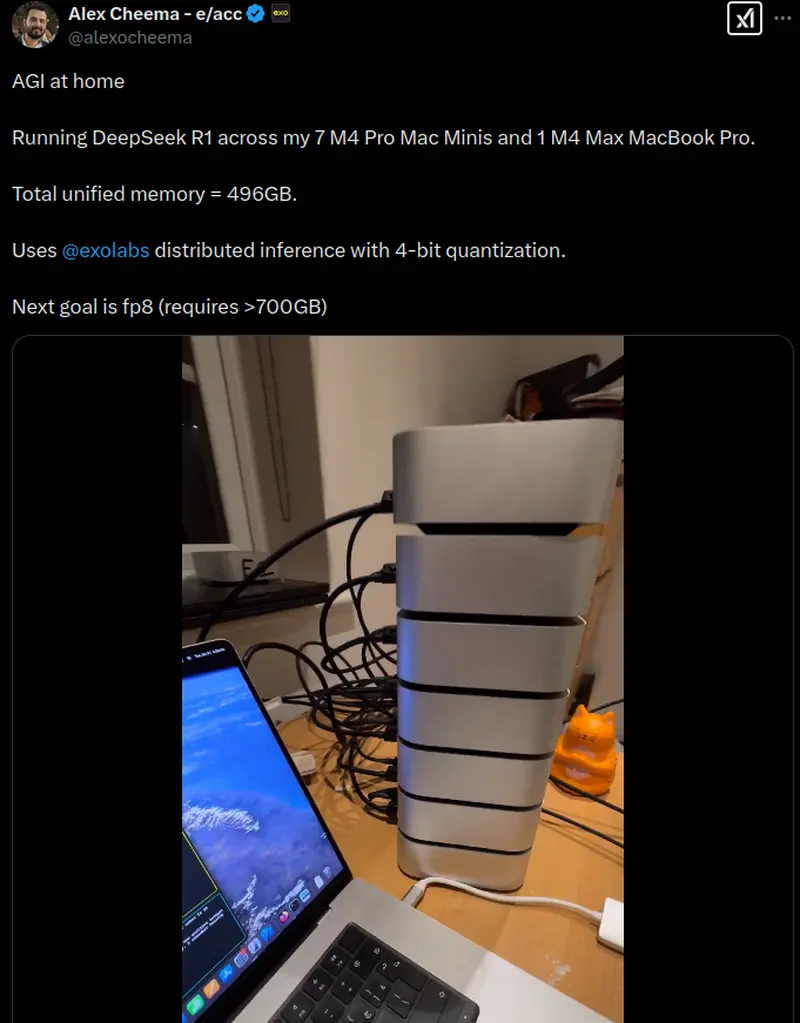

Use N Mac Studios with Exo to distribute the weights

External link to x.com

Cost: 24k+ (for 512 GB), 48k+ (for 1024 GB)

Total memory: 1 TB+

Speed: 3/5

Power consumption: 3/5 (1.5kW)

Bulkiness: 3/5 (unstable)

Looks: 5/5 (it's a Mac, people will like it)

Partner approval factor: 1/5 (I don't know how to explain that I spent 50k on 16 Mac minis)

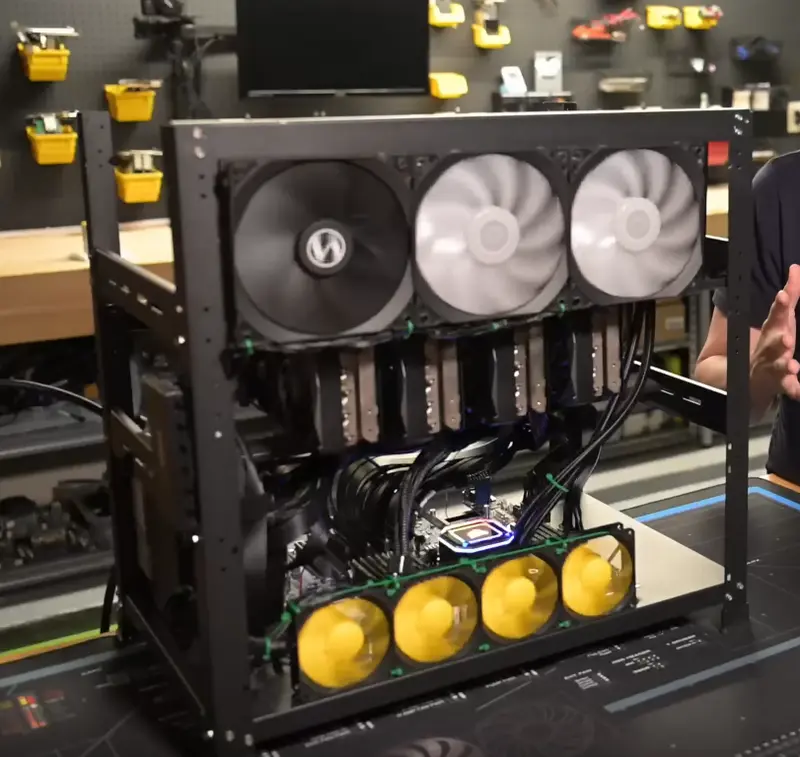

Multiple used GPUs in a "server"

This rig, for example, has 192 GB of memory; my target would need at least 3 of those.

Cost: 20k+

Total memory: 1 TB+ will probably require multiple motherboards

Speed: 4/5 (Parallel inference possible)

Power consumption: 1/5 (Metric fuck ton)

Bulkiness: 5/5

Looks: 2/5 (Ethereum miner in 2020)

Partner approval factor: 1/5 (I asked and was forbidden to occupy a whole room with GPUs)

Using a server motherboard: CPU inference

External link to digitalspaceport.com

Cost: 2-3k

Total memory: 1 TB+

Speed: 2/5

Power consumption: 5/5 (~400 W)

Bulkiness: 2/5

Looks: 3/5 (I like this techno-grunge, but to some people it could look scary)

Partner approval factor: 4/5 ("Looks like an overpowered gaming PC")

Blade server motherboard

Or as I like to call it: "Great, but can we make it cheaper"

After reviewing my options, I understood that getting a server motherboard is my local optimum. This category also has several sub-options:

- Buy a new motherboard with ATX power, a new/used EPYC or Xeon CPU, RAM, etc.

- Buy a used 1U/2U server, possibly with 2 CPUs, a PSU, etc. I would only need to buy RAM, and maybe quiet-ish fans.

Since inference is memory-bandwidth limited, DDR3 and older are out. 1U/2U servers are still bulky and noisy, and this can't be fixed easily. Then, while browsing eBay, I saw that you can get a 1U server blade for $20 (CPU included), and blades have several additional pros over a regular server motherboard.
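The memory-bandwidth argument can be put into rough numbers. The sketch below estimates a ceiling on tokens per second as peak memory bandwidth divided by the bytes read per generated token. Everything numeric here is an assumption, not a measurement from this build: about 37B parameters active per token (R1 is a mixture-of-experts model), roughly 4-bit weights, 4 memory channels per socket, and the listed memory speeds.

```python
# Upper-bound estimate: every active weight is read from RAM once per token.
ACTIVE_PARAMS = 37e9   # assumed active parameters per token (MoE)
BITS_PER_WEIGHT = 4    # assumed quantization level


def peak_bandwidth_gbs(mt_per_s: float, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s for one CPU socket."""
    return mt_per_s * 1e6 * bus_bytes * channels / 1e9


def max_tokens_per_s(bandwidth_gbs: float) -> float:
    """Bandwidth-bound ceiling on generation speed."""
    bytes_per_token = ACTIVE_PARAMS * BITS_PER_WEIGHT / 8
    return bandwidth_gbs * 1e9 / bytes_per_token


# Memory speeds are illustrative; 4 channels per socket is assumed throughout.
for name, speed in [("DDR3-1333", 1333), ("DDR4-1866", 1866), ("DDR4-2400", 2400)]:
    bw = peak_bandwidth_gbs(speed, channels=4)
    print(f"{name}: {bw:.1f} GB/s per socket, <= {max_tokens_per_s(bw):.1f} t/s")
```

With these assumptions, DDR4-1866 gives about 60 GB/s per socket and a ceiling of roughly 3 t/s. Sustained bandwidth, NUMA effects across two sockets, and compute limits will pull real numbers well below that, but it suggests the 0.5 t/s target is not unreasonable.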

Cost: Memory and a PSU have to be bought separately, but the CPU is included (add $600 for memory, $200 for the PSU)

Total memory: 1 TB, theoretically 2 TB possible (if you can find 128 GB modules)

Bulkiness: 5/5 (it's basically half of a 1U server), around 3-4 Mac minis in length

Looks: 4/5 (Techno-grunge, but smaller == cuter, right? Like cats and dogs)

Partner approval factor: 4/5 (If you can make it at least not noisy)

Speed and power consumption stay the same as with server motherboards.
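Whether a ~400 W machine fits the $20 per month running-cost goal depends on the electricity price and on how many hours it actually runs. A small sketch of that arithmetic follows; the price per kWh and the duty cycles are placeholder assumptions, and idle draw is not modeled.

```python
def monthly_cost(avg_watts: float, price_per_kwh: float,
                 hours_per_day: float = 24, days: int = 30) -> float:
    """Electricity cost for one month at a constant average draw."""
    kwh = avg_watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


PRICE = 0.15  # assumed price in USD per kWh; substitute your own tariff

# Duty cycles are hypothetical usage patterns, not measurements.
for label, hours in [("running 24/7", 24), ("running 4 h/day", 4)]:
    cost = monthly_cost(400, PRICE, hours_per_day=hours)
    print(f"400 W, {label}: ~${cost:.2f}/month")
```

At the assumed price, running at full draw around the clock would overshoot the $20 goal, while a few hours of inference per day stays under it.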

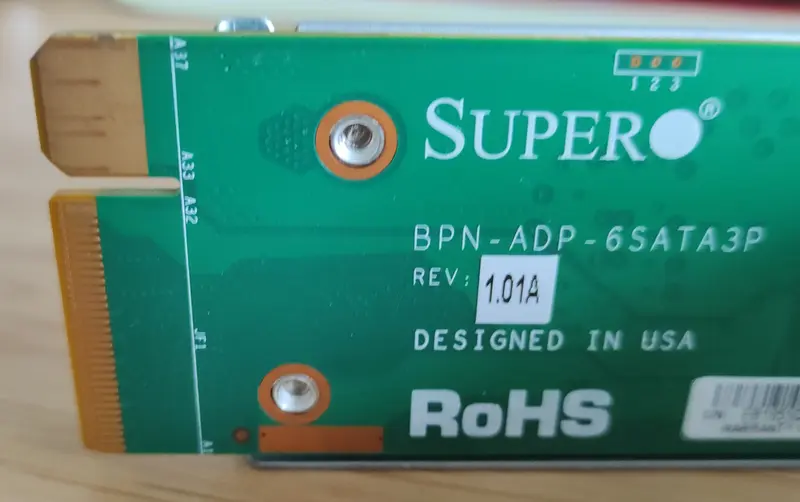

So, meet the specimen:

Supermicro X10DRT-P

- 2x Intel Xeon E5-2628L v3

- 16x DDR4 slots: 1 TB with 64 GB sticks (2 TB with 128 GB sticks)

- 1x SATA connector

- 1x PCIe x16 slot

- 1x PCIe x8 slot

- 2x x16 proprietary-format PCIe slots

- 2x 1Gb Ethernet

- 1Gb management port

- VGA port

- 2x USB 3.0

- 1x COM

- Cursed power/chassis connector

So, yes, this blade has 2 obvious problems: power and cooling.

Power is provided by a proprietary Supermicro blade connector:

Some comments on Reddit state that blades are impossible to use without the proper case because of the communication protocol between them.

At the same time, there is a post where a person is booting some blade server.

So at least some people are able to use a blade without a backplane.

Cooling in blade servers is usually up to the case fans, but this motherboard has 2 exposed fan connectors, and hopefully I can hook fans up to them.

This is the current state for now.

In the next post I will go over the power topic, and hopefully get to a first boot.

When the next part is available, a link to it will be here: Running DeepSeek-R1 on a refurbished blade server: 02 Power.

Bonus section of exotic (dumb) ideas

"just because you can doesn’t mean you should"

Here is a list of ideas that I came up with but decided not to pursue (for different reasons). They are sorted by the rising level of madness you need to implement them.

Use several NVMe drives and mmap

Plan: Get a motherboard with a lot of NVMe ports, buy N NVMe disks with max IOPS.

Pros: Could be super cheap, and probably smallest of all proposed.

Cons: Even slower than Optane, issues with random access speeds
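For context, the mmap part of this idea means mapping the weights file into the process address space and letting the OS page in only the bytes that are actually touched. Below is a minimal sketch using Python's standard `mmap` module. The file name `weights.bin` and its layout (raw little-endian float32 values) are hypothetical, and a small dummy file is written first so the example runs on its own.

```python
import mmap
import os
import struct
import tempfile

# Write a small dummy "weights" file so the sketch is runnable.
path = os.path.join(tempfile.mkdtemp(), "weights.bin")  # hypothetical file
values = [float(i) for i in range(1024)]
with open(path, "wb") as f:
    f.write(struct.pack(f"<{len(values)}f", *values))


def read_floats(mm: mmap.mmap, index: int, count: int) -> tuple:
    """Read `count` float32 values starting at element `index`."""
    return struct.unpack_from(f"<{count}f", mm, index * 4)


with open(path, "rb") as f:
    # Length 0 maps the whole file; pages are loaded lazily on access.
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        chunk = read_floats(mm, index=512, count=4)
        print(chunk)
```

Every access to a page that is not yet in RAM turns into a disk read, which is why random access speed is the weak point listed in the cons.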

Optane memory server

Plan: Buy an Optane-RAM-compatible server and 8 Optane sticks of 128 GB each.

Pros: 128 GB Optane sticks are dirt cheap

Cons: Very slow, and it's hard to get a server that supports it (I wasn't able to source one for a reasonable price)

64x 16 GB Raspberry Pis (or other SBCs)

Plan: Get three 48-port 1Gb switches, interconnect them, struggle, cry

Pros: You can become a popular SBC blogger

Cons: Costs a lot of money, slow inference

A lot of smaller PCs and older gaming rigs (16 or 32 GB ones)

Plan: Raid every eBay listing, Facebook Marketplace and thrift shop, and build a giant cluster out of them.

Pros: It's your chance to meet new people, you will see a lot of new places around the city (or even neighboring ones)

Cons: A lot of struggles to interconnect them, huge power consumption, constant hardware failures

LLM@Home

Plan: Organize a community of people who host parts of the weights on their PCs and compute/combine the results.

Pros: Basically free (It's not you who will pay for hardware) and in batch inference actually could work (like how SETI@Home works).

Cons: Slow, unreliable, huge overhead

Tape drives

Plan: Get several older-generation tape drives, connect them in parallel, distribute the weights between them.

Pros: Very comedic; getting 1 token per shuffle of tapes will make you appreciate the speed of modern computers.

Cons: No cons, please do it, share your video or post with me, I want to see it.